TL;DR: K8s serves 99% of homelab needs and more.

Kubernetes in the homelab? Why?

Docker and Podman are currently the most popular single-host OCI runtimes. They perform the same as a K8s node, are easier to set up, and have nice CLI tools to build and run containers. So why should you bother with K8s?

K8s is not just about containers

K8s is mostly known for containers, but that’s like saying that Linux is known for ext4. It’s only a small part of the entire ecosystem and doesn’t convey the true power of K8s.

K8s is at its heart a resource manager. It keeps a list of the resources it wants and compares it against what exists. Services can be plugged in to monitor these resource requests and make the required adjustments.

Example: your typical Ingress

Assume the following:

- Traefik is your reverse proxy and is monitoring your Ingress objects.

- External-DNS is set up and is monitoring your Ingress objects.

- Cert-Manager is set up and is monitoring your Ingress objects.

This means that 3 resource managers are watching K8s’s pool of Ingress objects for items that are not in sync. You then add:

    ---
    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      annotations:
        cert-manager.io/cluster-issuer: le-prod # Tells Cert-Manager to use Let's Encrypt Production to assign a TLS cert
        traefik.ingress.kubernetes.io/router.entrypoints: websecure,web # Tells Traefik that this should be served on ports 80 and 443
      name: example # object names must be lowercase
      labels:
        app: Example
    spec:
      rules:
        - host: example.com
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: example
                    port:
                      number: 8080
      tls: # < placing a host in the TLS config will indicate a certificate should be created
        - hosts:
            - example.com
          secretName: examplecom-cert # < cert-manager will store the created certificate in this secret

When you push this object, here is what happens:

- Traefik will now pass HTTP and HTTPS requests for example.com to your example service in the same namespace. It will try to use the cert, but the secret will still be empty.

- External-DNS will see that there is a new Ingress that it doesn’t have an entry for. It inherits the endpoint location from Traefik and will create a CNAME from example.com to Traefik’s public DNS record.

- Cert-Manager will see that the Ingress is attempting to use a cert that doesn’t exist and will use the le-prod issuer (currently set up to use the DNS challenge). Cert-Manager will then talk with Let’s Encrypt’s ACME endpoint and the DNS provider to get the cert, place it in the examplecom-cert secret, and renew it as needed over time.

- Traefik will see that the secret is now populated and will use that certificate.

This will happen over a span of 2 minutes, with Cert-Manager’s DNS challenge taking the most time. (An HTTP challenge would be faster.)
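The flow above depends on an issuer named le-prod that already exists and solves the DNS challenge. That issuer is not shown here, so the following is only a sketch of what one could look like. It assumes Cloudflare as the DNS provider, and the email address, the `le-prod-account-key` secret, and the `cloudflare-api-token` secret are hypothetical placeholders to swap for your own.

```yaml
# Sketch only: DNS provider, email, and secret names are assumptions.
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: le-prod # must match the cert-manager.io/cluster-issuer annotation
spec:
  acme:
    # Let's Encrypt production ACME endpoint
    server: https://acme-v02.api.letsencrypt.org/directory
    email: admin@example.com # hypothetical contact address
    privateKeySecretRef:
      name: le-prod-account-key # cert-manager stores the ACME account key here
    solvers:
      - dns01:
          cloudflare: # assumed provider, use the solver for your own DNS host
            apiTokenSecretRef:
              name: cloudflare-api-token # hypothetical secret holding the API token
              key: api-token
```

Because this is a ClusterIssuer, the token secret would normally live in the namespace Cert-Manager itself runs in, and any Ingress in the cluster can then reference le-prod through the annotation.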

But… How do I set it up?

How hands on do you want to be?

Distributions of K8s are a thing: k3s, OKD, and others. kubeadm is the most technically simple install, and it is what I used.

Pick the red pill or the blue pill

Here are your choices:

OS: Anything that can run an OCI runtime.

Runtimes: containerd or CRI-O (containerd supports a wider range of CRI versions than CRI-O and also supports Windows).

Networking Plugin: The best known are Calico and Flannel.

Flannel is a simple VXLAN router and works well even if you can’t control your router.

Calico is your option if you can control your router and it supports BGP.

Storage: NFS-Subdir-Provisioner for simple solutions, or options like Rook and Longhorn if you want a hyperconverged or more advanced setup.

DNS: External-DNS

Certificate management: Cert-Manager

Load Balancer: Either MetalLB with ARP or BGP, or Calico with ExternalServiceIP and BGP
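As an illustration of the MetalLB with ARP option, here is a minimal sketch of a layer 2 configuration. It assumes a MetalLB release that is configured through custom resources and installed in the `metallb-system` namespace. The pool name, advertisement name, and address range are hypothetical and need to be replaced with a free range on your own LAN.

```yaml
# Sketch only: names and the address range are assumptions.
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: homelab-pool
  namespace: metallb-system
spec:
  addresses:
    - 10.10.0.100-10.10.0.150 # hypothetical range, keep it outside your DHCP scope
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: homelab-l2
  namespace: metallb-system
spec:
  ipAddressPools:
    - homelab-pool # announce addresses from the pool above via ARP
```

With something like this in place, a Service of type LoadBalancer (Traefik’s, for example) should receive an address from the pool without needing any BGP support on the router.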

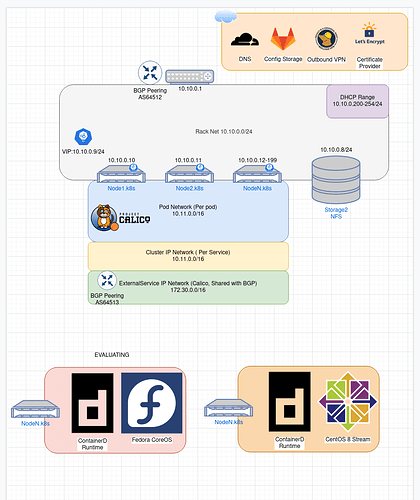

Here is a basic overview of my K8s cluster.

All storage is on my NFS node. I preemptively use IP 10.10.0.9 as my API’s address so I can migrate it across nodes as I need to update and once a new load balancer can be installed.
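One way a fixed API address like this can be expressed with kubeadm is through the `controlPlaneEndpoint` field of a cluster configuration file. The sketch below is an assumption about how that might look, not my exact config. The API version depends on your kubeadm release, and the pod subnet shown is simply Flannel’s usual default.

```yaml
# Sketch only: adjust apiVersion and subnet to your kubeadm release and CNI.
apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
# Certificates and kubeconfigs are generated against this address,
# so the API can later move between nodes without being reissued.
controlPlaneEndpoint: "10.10.0.9:6443"
networking:
  podSubnet: "10.244.0.0/16" # assumed, matches Flannel's default network
```

A file like this would be passed to `kubeadm init --config <file>`. Something outside the file still has to keep 10.10.0.9 pointing at a live control-plane node.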