Self-hosting advanced AI text-to-art

At the time of writing, Stable Diffusion combined with AUTOMATIC1111’s stable-diffusion-webui provides the most advanced AI text-to-art experience that can be self-hosted, and many of its features don’t exist on any other platform, free or proprietary.

Note: Make sure to install ControlNet below to double the value of stable-diffusion.

Why does this guide use conda?

conda lets you run Python apps with their own Python and library versions, without conflicting with the versions installed by the OS.

Hardware

This section is a summary limited to my research and experience and shouldn’t be considered the final word.

Minimum: Nvidia GPU with 6GB of VRAM. 8GB or more is recommended.
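
Once the drivers further down are installed, a card can be checked against these figures with something like the sketch below. The function name vram_tier and the cut-offs of 5500 and 7500 MiB are my own assumptions, not part of sdw. They are set a little under 6GB and 8GB because a card may report slightly less than its nominal size.

```shell
# Hypothetical helper: classify a VRAM size (in MiB) against this guide's figures.
# The cut-offs are assumptions, set a little below 6GB and 8GB.
vram_tier() {
    local mib="$1"
    if [ "$mib" -ge 7500 ]; then
        echo "recommended"
    elif [ "$mib" -ge 5500 ]; then
        echo "minimum"
    else
        echo "below minimum"
    fi
}

# Only query the card if the Nvidia driver tools are present
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits |
        while read -r mib; do
            echo "${mib} MiB: $(vram_tier "$mib")"
        done
fi
```

On a machine with several cards this prints one line per GPU.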

AMD and Intel

While you can run sdw (stable-diffusion-webui) on AMD GPUs, Intel GPUs and Intel CPUs, I’ve never done so. AMD GPUs may also need double the VRAM to perform the same actions (citation needed). See the sdw git docs for details.

Nvidia

Ampere series (latest) GPUs are the most recommended for both cost and features but Turing, Volta, Pascal and Maxwell series GPUs should all work. See: cuda support

I recommend the following cards and have used both with stable-diffusion-webui:

Cheapest card: Nvidia Quadro P4000 8GB

Best AI all-rounder and value for money (by far): Nvidia RTX 3060 12GB

Resources:

https://en.wikipedia.org/wiki/List_of_Nvidia_graphics_processing_units

https://en.wikipedia.org/wiki/CUDA

Installing drivers

Blacklisting nouveau

# Check if you have nouveau drivers installed

lsmod | grep nouveau

If this command prints anything, follow this guide to blacklist nouveau.

Installing drivers

# Check if your card is recognized

lspci | grep -iE 'vga|3d'

If Linux isn’t picking up the card, make sure the hardware is physically installed correctly, has enough power and is operational.

# Check if your card is using nvidia drivers

lsmod | grep nvidia

If there’s no output, follow this install guide for ubuntu or run this for Debian: sudo apt install nvidia-driver
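
The two lsmod checks above can be folded into one pass. A minimal sketch, assuming a helper I’ve called module_loaded (it is not a standard command). Unlike a plain grep, it matches the module name exactly against the first column of lsmod’s output.

```shell
# Hypothetical helper: succeed if the named kernel module appears in the
# first column of lsmod-style output read from stdin (exact match only).
module_loaded() {
    awk -v mod="$1" '$1 == mod { found = 1 } END { exit !found }'
}

if command -v lsmod >/dev/null 2>&1; then
    if lsmod | module_loaded nouveau; then
        echo "nouveau is loaded: blacklist it before installing the Nvidia driver"
    fi
    if lsmod | module_loaded nvidia; then
        echo "nvidia driver is loaded"
    else
        echo "nvidia driver is not loaded"
    fi
fi
```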

Install cuda and test

sudo apt install nvidia-cuda-toolkit

# Confirm your card is visible

nvidia-smi

# Confirm the cuda toolkit is installed

nvcc --version

# Reboot

sudo reboot

Install stable-diffusion-webui in a conda environment

Install dependencies

sudo apt update

# Packages used in this guide

sudo apt install wget curl

# Packages used by stable-diffusion-webui

sudo apt install git libgl1 libglib2.0-0 -y

# Optional packages

# (Resolves error: Cannot locate TCMalloc (improves CPU memory usage))

sudo apt install libgoogle-perftools4 libtcmalloc-minimal4 -y

Install miniconda

curl -sL "https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh" > "Miniconda3.sh"

bash ./Miniconda3.sh

# Press Enter to continue

# Press q to quit license

# Type yes to accept license

# Press Enter to confirm install location

# Type no to not have conda auto-initialize on opening a shell

Create a conda environment for stable-diffusion-webui running python 3.10 and activate it

source ~/miniconda3/etc/profile.d/conda.sh

conda create -n sd-webui python=3.10 -y

conda activate sd-webui

Install stable-diffusion-webui

wget -q https://raw.githubusercontent.com/AUTOMATIC1111/stable-diffusion-webui/master/webui.sh

bash ./webui.sh

Wait for the downloads to finish. This will take a long time and requires a stable internet connection.

Resolving webui.sh errors (skip if there are none)

# Cloning Taming Transformers

# For error: "error: RPC failed; curl 56 GnuTLS recv error (-54): Error in the pull function."

cd ~/stable-diffusion-webui/

git config --global http.postBuffer 1048576000

cd ~

# If internet is unstable, loop installation till it completes

while ! bash ~/webui.sh; do sleep 10; done
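
The loop above retries forever, which can hide a failure that has nothing to do with the network. A bounded variant, as a sketch: retry is a hypothetical helper name, and the attempt count and delay in the usage line are arbitrary choices.

```shell
# Hypothetical helper: retry <max_attempts> <delay_seconds> <command...>
# Runs the command until it succeeds or the attempt limit is reached.
retry() {
    local max="$1" delay="$2" attempt=1
    shift 2
    until "$@"; do
        if [ "$attempt" -ge "$max" ]; then
            echo "giving up after ${attempt} attempts: $*" >&2
            return 1
        fi
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}

# Usage (not run here): try the installer up to 20 times, 10 seconds apart
# retry 20 10 bash ~/webui.sh
```

If it gives up, the last error printed by webui.sh is the one to look into.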

Test

In another terminal, test if the site is up. You should see pages of HTML.

curl http://127.0.0.1:7860
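
The first launch can take a while before it starts listening, so a single curl may fail simply because it ran too early. A sketch that polls until the page answers. wait_for_url is a hypothetical name, and the 60 tries and 5 second delay in the usage line are arbitrary.

```shell
# Hypothetical helper: wait_for_url <url> <max_tries> <delay_seconds>
# Succeeds as soon as curl gets a successful HTTP response from the URL.
wait_for_url() {
    local url="$1" tries="$2" delay="$3" i=1
    while [ "$i" -le "$tries" ]; do
        # -s: quiet, -f: treat HTTP errors as failure, -o: discard the page body
        if curl -sf -o /dev/null --max-time 5 "$url"; then
            echo "up after ${i} tries: ${url}"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "no response from ${url}" >&2
    return 1
}

# Usage (not run here): poll for up to about 5 minutes
# wait_for_url http://127.0.0.1:7860 60 5
```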

Exit the active stable-diffusion-webui instance using Ctrl+C

How to launch stable-diffusion-webui in future

The conda environment needs to be activated, then webui.sh can be run.

OPTION 1: Run these commands each time

source "$HOME/miniconda3/etc/profile.d/conda.sh"

conda activate sd-webui

bash "$HOME/webui.sh" -f --port 7860 --xformers

OPTION 2: Make a launch function in your .bashrc

# Paste this block into terminal and press enter

cat <<-'SDLauncher' >> ~/.bashrc

sd-webui() {

source "$HOME/miniconda3/etc/profile.d/conda.sh"

conda activate sd-webui

bash "$HOME/webui.sh" -f --port 7860 --xformers

}

SDLauncher

Close and re-open your terminal. Now all you need to run is:

sd-webui

Optional installation of models and enhancers (highly recommended)

# Models

cd $HOME/stable-diffusion-webui/models/Stable-diffusion

wget -c https://huggingface.co/ckpt/rev-animated/resolve/main/revAnimated_v11.safetensors

wget -c https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.safetensors

# VAE

cd $HOME/stable-diffusion-webui/models/VAE

wget -c https://huggingface.co/stabilityai/sd-vae-ft-mse-original/resolve/main/vae-ft-mse-840000-ema-pruned.ckpt

# Pre-download upscaler models

cd $HOME/stable-diffusion-webui/models/ESRGAN/

wget -c https://github.com/cszn/KAIR/releases/download/v1.0/ESRGAN.pth

cd $HOME/stable-diffusion-webui/models/RealESRGAN/

wget -c https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth

wget -c https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth

# Embeds for prompt enhancement

cd $HOME/stable-diffusion-webui/embeddings

wget -c https://huggingface.co/yesyeahvh/bad-hands-5/resolve/main/bad-hands-5.pt

wget -c https://huggingface.co/datasets/Nerfgun3/bad_prompt/resolve/main/bad_prompt_version2.pt

wget -c https://huggingface.co/Xynon/models/resolve/main/experimentals/TI/bad-image-v2-39000.pt

wget -c https://huggingface.co/datasets/gsdf/EasyNegative/resolve/main/EasyNegative.pt
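
A failed download can leave a file missing or empty. One way to spot that, as a sketch: check_files is a hypothetical helper, and it only tests that each file exists and is non-empty. It will not catch a partial download, though re-running the same wget -c command resumes those.

```shell
# Hypothetical helper: check_files <dir> <file...>
# Reports any listed file that is missing or empty; fails if one is found.
check_files() {
    local dir="$1" name status=0
    shift
    for name in "$@"; do
        if [ -s "${dir}/${name}" ]; then
            echo "ok       ${name}"
        else
            echo "MISSING  ${name}"
            status=1
        fi
    done
    return "$status"
}

# Usage (not run here), with file names taken from the downloads above:
# check_files "$HOME/stable-diffusion-webui/embeddings" \
#     bad-hands-5.pt bad_prompt_version2.pt bad-image-v2-39000.pt EasyNegative.pt
```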

Optional: GUI Configuration

Open a browser and go to: http://127.0.0.1:7860

Enable a VAE (if you installed it above):

- On the top bar, click “Settings”

- On the side bar, click “Stable Diffusion”

- Click the “SD VAE” dropdown and choose: vae-ft-mse-840000-ema-pruned.ckpt

- Click “Apply settings”

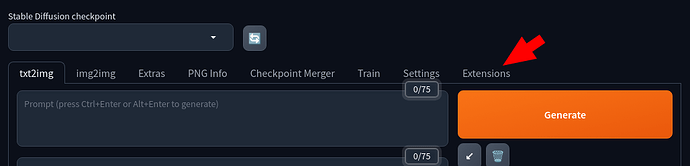

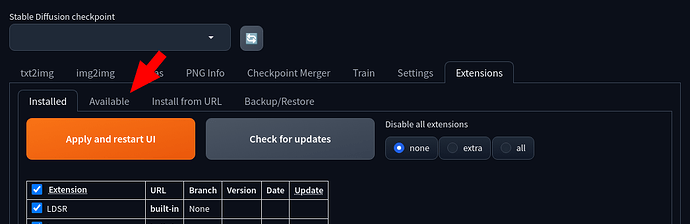

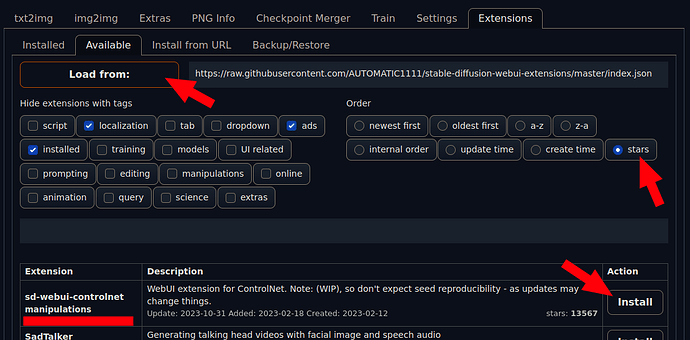

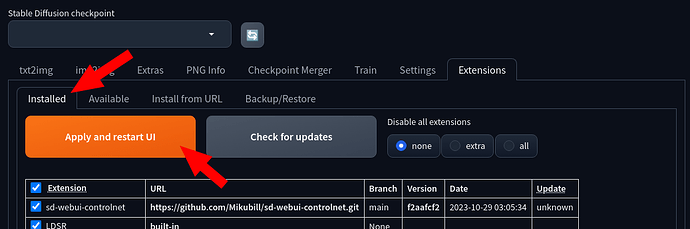

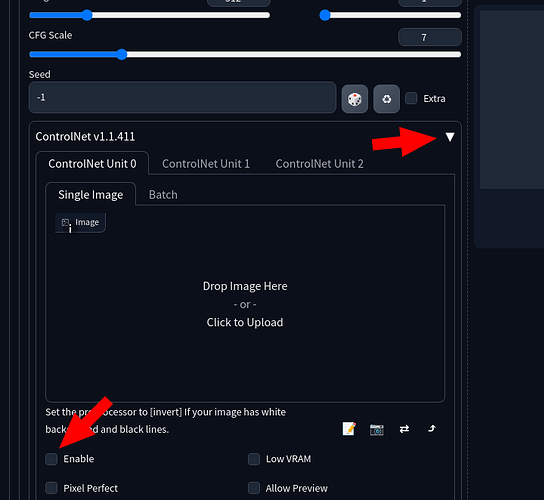

Install ControlNet (and other extensions):

In the txt2img and img2img sections you’ll now have ControlNet.

Privacy/Security note

The web interface uses CSS from fonts.googleapis.com and a script from cdnjs.cloudflare.com. These online dependencies can be removed by downloading and localizing the references. I’ll write a script to do this automatically if there’s demand.
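
To see where those references live before localizing them, a recursive grep is a reasonable starting point. This is a sketch only: find_external_refs is a hypothetical name, and I’m assuming the references sit somewhere under the install directory (including the Python virtual environment that webui.sh creates there), which I haven’t verified for every version.

```shell
# Hypothetical helper: find_external_refs <dir>
# Lists text files under <dir> that mention either external host.
# -r: recurse, -I: skip binary files, -l: print file names only, -E: extended regex
find_external_refs() {
    local dir="$1"
    grep -rIlE 'fonts\.googleapis\.com|cdnjs\.cloudflare\.com' "$dir" 2>/dev/null
}

# Usage (not run here):
# find_external_refs "$HOME/stable-diffusion-webui"
```

The function returns a non-zero status when nothing matches, so it can also be used in an if test.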

Using Stable Diffusion

Renders are stored in: ~/stable-diffusion-webui/outputs/

Example Prompt

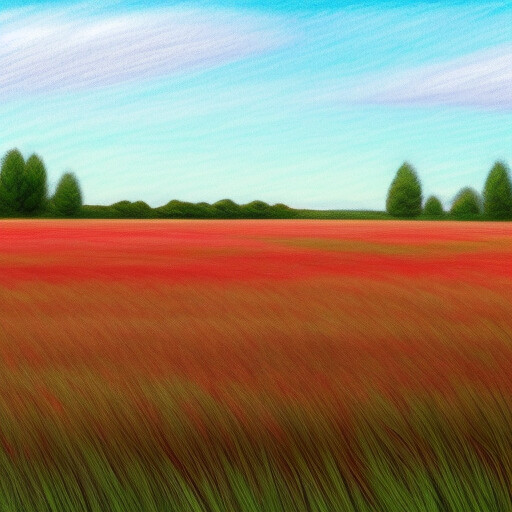

AI model: revAnimated_v11.safetensors

Sampling: Euler a

CFG Scale: 6

Resolution: 1080x720

Positive Prompt

((best quality)), ((masterpiece)), ((realistic)), (detailed), (cyberpunk), (Ferrari), race cars, blender, intricate background, intricate buildings, ((masterpiece)), HDR

Negative prompt

(semi-realistic:1.4), (worst quality, low quality:1.3), (depth of field, blurry:1.2), (greyscale, monochrome:1.1), nose, cropped, lowres, text, jpeg artifacts, signature, watermark, username, blurry, artist name, trademark, watermark, title, multiple view, Reference sheet